AI ART: 4 Key Trends

Opportunities to Innovate + Engage

- Emotional connections: Why does AI-generated content so often closely resemble the text it was trained on, and does it lack the transformative, human element that characterizes genuine creative work? Can AI make something that genuinely makes someone feel understood, seen, less alone? We can look at these images as just a collection of pixels, judge them on purely aesthetic appeal, but for so many people, the human element behind the art is actually the most important. It’s not just about looking at an image; it’s about connecting with someone, maybe even feeling the same thing they do.

- Authentic Interactions: In what ways have artists and creatives used AI tools to generate unique and innovative pieces, and what concerns have been raised about their use in commercial contexts? What are the main types of “artificial intelligence” creators currently use? Chiefly generative pretrained transformers (GPTs) and diffusion models, both of which are artificial neural networks (ANNs).

- Exploiting the Popular: How has the availability of new AI tools since late 2022 impacted the popularity of AI art among creators? What are the copyright concerns raised when AI models consume creators’ work without offering compensation?

- The Warholization of Digital Content: How can creators advocate for the responsible use and regulation of AI technology, and what factors should be considered when evaluating the purpose and value of AI-generated content in the creative field? In the ’80s, Andy Warhol famously appropriated for his work copyrighted photographs, movie stills, logos, advertisements, and soup cans. Whenever he was sued, he would defend his work as transformative. Warhol claimed he turned these objects into ART.

Excerpt below via SSENSE

Author Liara Roux Weighs in on Everybody’s Favorite New Technology

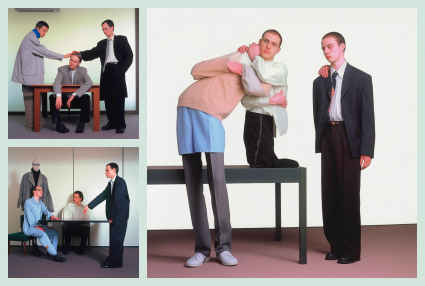

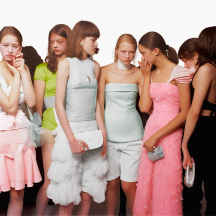

How derivative is too derivative? Imitation, viewed in a certain light, is flattery; in another, it’s theft. Since new tools were made accessible to the public in late 2022, AI art has taken off; my social media feeds are saturated with it. Some artists have made novel pieces with these tools—fashion photographer Charlie Engman harnesses them to create surreal works whose aesthetics neatly fit in his preexisting oeuvre, while Str4ngeThing generated streetwear inspired by Renaissance-era fashions. Vaquera published gothic images of an eerie runway proclaiming “FASHION IS DEAD.”

It’s easy to feel the power of AI. It’s used to iterate quickly on ideas, without needing to labor IRL on a photo set or digitally in Blender. Certain artists have criticized the use of AI, especially in commercial contexts; they claim it’s being used by companies to rip off their work without payment. The American legal system has developed complex intellectual property laws to govern this dilemma—with the ingress of AI, how do we make sure artificial intelligence is playing by the rules we all must follow?

To start, artificial intelligence might be a misnomer. There are two popular forms of “artificial intelligence” right now: generative pretrained transformers (GPTs) and diffusion models—both forms of artificial neural networks (ANNs).

GPTs are the most common type of ANNs at the moment. They’re the ones responsible for the most successful text-based “AI” programs. OpenAI’s ChatGPT has been wildly popular, with over 100 million users at the time of writing. I’ve been using it to practice my French and a friend used it to write copy for his startup. Unlike previous language processors, which were trained solely on text considered relevant to their given task, GPTs are trained on large and generalized swaths of text and only later given specific directives.

Diffusion models, like Stable Diffusion and DALL-E 2, are ANNs that focus instead on generating images. They’re trained on vast numbers of images, each associated with a text description. They’re then given a “noise” image that essentially looks like old-school television static. From there, they’re instructed to find an image in the noise. If you’ve ever looked at clouds and seen a face, or stared at the ceiling too long and started seeing strange images emerge, you’ve essentially done the same.
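To make the "find an image in the noise" idea concrete, here is a deliberately tiny Python sketch. It is an illustration under loud assumptions, not how Stable Diffusion or DALL-E 2 is implemented: the function name `denoise_from_static` is hypothetical, the "image" is just a short list of brightness values, and the `target` list stands in for the prediction that a trained network would produce from a text prompt.

```python
import random

def denoise_from_static(target, steps=50, seed=0):
    """Toy reverse-diffusion loop: start from static, step toward an image.

    `target` is a stand-in (an assumption of this sketch) for what a trained
    network would predict from a prompt; a real model learns that prediction.
    """
    rng = random.Random(seed)
    # Start from pure noise, like television static.
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        remaining = steps - step
        # Move each pixel a fraction of the way toward the predicted image.
        image = [px + (t - px) / remaining for px, t in zip(image, target)]
        # Re-inject a little noise that shrinks to zero by the last step.
        noise_scale = 0.1 * (remaining - 1) / steps
        image = [px + rng.gauss(0.0, noise_scale) for px in image]
    return image

# A four-"pixel" image: dark, mid, bright, mid.
target = [0.0, 0.5, 1.0, 0.5]
print(denoise_from_static(target))
```

The loop begins with random values and ends very close to `target`, which is the whole trick in miniature: many small denoising steps, each guided by a guess at what the finished picture should be.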

Given the way they function, I don’t think it’s fair to call either of these models truly intelligent. They are not sufficiently self-aware. Even if you ask Stable Diffusion or ChatGPT to define themselves, they are not truly, deeply self-referential as an integral part of their makeup. If ChatGPT had spent a great deal of time being trained on itself and conversations it had with others, then trained onward and onward on its reflections (or outputs) on that data, then perhaps it might be slowly heading towards consciousness.

Consciousness, our individual and unique perspective, is what allows creative work to be truly transformative, which is critical when we consider copyright law. In fact, these AIs often spit back content that matches text they were trained on word for word. Most high school plagiarists are smart enough to go in and shuffle things around. It’s easy to tell when AI art has been intentionally guided by an artist into creating a specific aesthetic or when someone has just entered generic prompts. Engman told SZ Magazin that “when I added an emotional layer like ‘award winner’ or ‘proud,’ the AI interpreted the image in a new [way].” The AI generator can depict these abstract concepts, but can’t empathize to the point of experiencing them. So while many people claim that these AI models are artists, or even curators, I think it’s more appropriate to compare them to any other piece of equipment an artist might use. A paintbrush, a camera.

In the ’80s, Andy Warhol famously appropriated for his work copyrighted photographs, movie stills, logos, advertisements, and soup cans. Whenever he was sued, he would defend his work as transformative. Not unlike a Catholic mass, wherein a humble wafer is transubstantiated into the literal body of Christ, Warhol claimed he turned these objects into ART.

At the time, this caused a stir, and even now, decades later, his work is controversial; in 2022, the Supreme Court heard arguments from a photographer, Lynn Goldsmith, who claimed she was entitled to compensation for Warhol’s use of her work. The court has not yet published its final decision on the matter.

When it comes to commercial work, imitations are a bit harder to justify. “I think moviemakers might be surprised by the notion that what they do can’t be fundamentally transformative,” Supreme Court Associate Justice Elena Kagan mused during the Warhol arguments. Indeed, a filmmaker cannot just read a book and decide they want to make a movie about it. To ensure that writers are properly compensated for their labor, filmmakers are required to pay for the rights to their stories.

Stable Diffusion and ChatGPT, on the other hand, have not, to my knowledge, compensated anyone for the contributions their intellectual property has made to these models. Artists and writers have had their entire oeuvres swallowed up into behemoth repositories that these models are trained on. The models even at times spit out work that is eerily similar to the work of artists they have arguably stolen from.

I am a part of the AI training sets. My selfies and my writing have been consumed. There’s a bot that’ll imitate my tweets. A 4chan post in January even accused me of being AI-generated myself. In my younger years I hung around anarchist hacker types who would argue that information wants to be free. They’d say that imitation is only natural; memetic communication is crucial to the evolution of humanity. In my early years on the internet that certainly felt true, watching trends and information spread fungus-like throughout our digital networks. Now I watch corporations skim the cream off my cool kid friends, making big bucks off their free labor on social media.

Caroline Caldwell, a friend who’s an illustrator and a tattoo artist, told me her designs are already frequently lifted by graphic designers creating labels for edgy beer brands or inspirational shirts. She was worried AI would only make this problem worse. She’s barely scraping by, pained and frustrated. For her, art is about making something human, something connective. Of course AI art could be used to make appealing imagery, she said, but could it make something that genuinely made someone feel understood, seen, less alone? We can look at these images as just a collection of pixels, judge them on purely aesthetic appeal, but for so many people, the human element behind the art is actually the most important. It’s not just about looking at an image; it’s about connecting with someone, maybe even feeling the same thing they do.

While ChatGPT is essentially given large collections of texts and left to run wild, only refined later in the process, models like Stable Diffusion need to be trained on cleaned and calibrated data. Massive amounts of it. This data preparation is usually contracted out to companies who are largely based in the Global South, paying exploitative rates to their employees.

Everyone who’s used CAPTCHA has participated in training AI: typing in words, letters, identifying photos of sidewalks and streetlights. For a while, a group of 4chan-based trolls would enter a slur into every CAPTCHA they came across, hoping to maliciously insert the word into ebooks that were digitized using CAPTCHA. OpenAI and other companies have built-in guidelines for AI, but the idea of working with cheap or free labor terrifies me. Who’s to say there isn’t a group of 4chan trolls out there, clicking on children in the hopes that it’ll make Teslas more likely to run them over, purposefully tagging offensive or traumatizing imagery as safe?

Why are these companies focusing so hard on generating text and images? These are such inherently human tasks—human neural architecture is already oriented around processing language and imagery. Wouldn’t it be more interesting to look at AI’s potential applications in medical research, safety measures, or risk analysis, areas where it can compensate for humans’ homegrown fallacies and shortcomings?

AI has been used to improve driving safety, to prevent people from crossing into another lane, to help doctors identify early stage cancer, to improve the efficiency of the power grid and public transportation.

Artist and educator Melanie Hoff pointed out that we can reclaim AI for ourselves, that AI’s “structure is a more exciting tool than using preexisting structures and taking what those structures produce.” She explained that “the downside [of ChatGPT and Stable Diffusion] is that people are using large premade datasets…instead of making their own databases and their own AI structures.”

Hoff pointed me to a work she made, Partisan Thesaurus, a rudimentary AI that linked commonly associated words; she trained it on two different bodies of text: one set of speeches and writing by Republicans, another by Democrats. It lays bare biases which might have otherwise been obscured. She also pointed me to Bomani Oseni McClendon’s work, particularly a piece called Black Health, a book which scraped WebMD to look for references to “Black” or “African.” The results were a disturbing condemnation of the biased nature of the medical field.

These works are much more interesting to me than simply exploiting existing content for the sake of making even more content that exists solely to be consumed or to advertise. These pieces use AI to simplify work that might have been challenging and time-consuming for a human, but that is straightforward for a computer, and to clarify things that might have otherwise been obscured. Shouldn’t that be the point of AI?
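The word-linking idea behind Partisan Thesaurus can be sketched in a few lines of Python. This is only a guess at the general technique, counting which words show up near a chosen word in two separate bodies of text; it is not Hoff’s actual code, and the function name `associated_words`, the window size, and both sample corpora are hypothetical stand-ins invented for illustration.

```python
from collections import Counter

def associated_words(text, word, window=2):
    """Count the words that appear within `window` tokens of `word`."""
    tokens = text.lower().split()
    counts = Counter()
    for i, token in enumerate(tokens):
        if token != word:
            continue
        start = max(0, i - window)
        # Neighbors on both sides of the match, excluding the word itself.
        neighbors = tokens[start:i] + tokens[i + 1:i + 1 + window]
        counts.update(neighbors)
    return counts

# Hypothetical stand-in corpora; not the speeches and writing Hoff used.
corpus_a = "taxes burden families and taxes hurt growth"
corpus_b = "taxes fund schools and taxes fund roads"

print(associated_words(corpus_a, "taxes").most_common(3))
print(associated_words(corpus_b, "taxes").most_common(3))
```

Run on two real bodies of text, the same word would surface different neighbors in each, which is the kind of contrast the piece puts on display.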

Journalist Edward Ongweso Jr told me that he’s concerned these tech companies are trying to build a god: “They’re [thinking] if we integrate [AI] into our society, it will uplift us…act as an overseer to organize our resources better and ensure better politics.” Instead of building humanistic systems, designed to improve people’s lives, these AI “gods” will be used to extract further profits, to extract time and attention from humans.

AI is a murky area; it doesn’t help that these companies are so secretive. Their algorithms and datasets are all proprietary, carefully guarded. All of my friends who work in the field apologetically told me they couldn’t speak on the record, because their employers forbid it. Given the effect Silicon Valley’s “move fast and break things” ethos has had on our politics, our social lives, our brains, perhaps it’s finally time to slow these companies down, insist that whatever systems they’re creating are ones that are built with humanity in mind, not just further extracting as much wealth as possible from a world that’s stretched so thin.

The work of George Washington Carver comes to mind here. You can’t just build a farm, grow the same crops on the same land year after year. You’ll exhaust the soil. You need to rotate through different crops, give something back. Is ChatGPT or Stable Diffusion truly giving anything back?

When OpenAI was initially founded, it was intended to be a nonprofit to promote transparency around artificial intelligence. Instead, it created a for-profit subsidiary that is now even more secretive than Google’s AI research division. While I want to hope that Silicon Valley has learned to temper its speed from the last time it moved fast and broke things, it’s up to the users to advocate for the appropriate use and regulation of this new technology.

While in the hands of a capable artist, AI can be used to create something meaningful—even valuable—for society, how many more photos, videos, and texts do we need competing for our attention? What’s the point of avoiding paying an illustrator’s fee to work with an AI that’ll make an image that is by its very nature derivative?

- Text: Liara Roux

- AI generations: Gavin Park

- Date: April 3, 2023